AI Job Trends to Watch in 2025: The Rise of Generative AI, LLMs, Advanced Prompt Engineering and Cutting-Edge Tools

OrcaPulse JobAlert | Edition # 04

As we step into 2025, the domains of data science, artificial intelligence (AI), and machine learning (ML) are burgeoning with innovations that promise to redefine our interaction with technology. My exploration into the evolving landscape reveals a series of emerging trends and tools set to shape our approach to data and AI applications. Let’s delve into these transformative trends that are expected to dominate 2025 and beyond.

Next-Generation LLMs: Specialization and Multi-Modal Integration

The evolution of Large Language Models (LLMs) has been nothing short of revolutionary. Moving forward, I see a significant shift towards specialized models that cater to specific industries like healthcare, finance, and legal. These domain-specific LLMs are not just about enhanced accuracy; they are about contextual intelligence that aligns with industry standards and regulatory requirements. Furthermore, the advent of multi-modal models, which integrate text, image, and video, opens new avenues for interactive applications in marketing, entertainment, and more. Imagine a healthcare LLM that simultaneously processes patient histories, medical images, and lab results to assist diagnoses—a game-changer for medical professionals.

Advanced Prompt Engineering: Elevating Interaction Precision

Prompt engineering has rapidly matured, and in 2025, I anticipate more refined techniques that will allow us to tailor AI interactions with unprecedented precision. Dynamic prompting will enable real-time adjustments based on contextual cues and user feedback, enhancing personalization in services ranging from customer support to content creation. The development of prompt chaining and templates will empower even those without deep technical expertise to deploy effective AI tools, democratizing AI utility across sectors.
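To make prompt templates and prompt chaining concrete, here is a minimal sketch. It is illustrative only: `fake_llm`, `run_chain`, and the two templates are hypothetical names invented for this example, and `fake_llm` simply echoes its prompt so the code runs without any model provider. In practice you would swap in a call to whichever model API you use.

```python
from string import Template
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it only echoes the prompt."""
    return f"[model output for: {prompt}]"


def run_chain(
    templates: List[Template],
    user_input: str,
    llm: Callable[[str], str] = fake_llm,
) -> str:
    """Prompt chaining: each step's output fills the next template's $input slot."""
    current = user_input
    for template in templates:
        prompt = template.substitute(input=current)
        current = llm(prompt)
    return current


# Reusable templates that a non-specialist could edit without touching the code.
SUMMARIZE = Template("Summarize this support ticket in one sentence: $input")
REPLY = Template("Write a polite reply to a customer based on this summary: $input")

if __name__ == "__main__":
    print(run_chain([SUMMARIZE, REPLY], "My order arrived damaged."))
```

Keeping the templates separate from the chaining logic is the design choice that matters here: the wording of each step can change without any change to the code that runs the chain.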

Ethics and Transparency: The Cornerstones of Trust

As AI becomes intricately woven into the societal fabric, maintaining ethical integrity and transparency is paramount. I expect the introduction of tools that elucidate AI decision-making processes, fostering trust and making AI more accessible and understandable. This is particularly critical in sectors like finance, where decisions need to be transparent and justifiable. Additionally, new frameworks for bias detection and mitigation will be crucial for cultivating ethical AI systems that promote fairness and inclusivity.
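One simple bias check such frameworks might include is the demographic parity gap: the difference in approval rates between groups. The sketch below is a rough illustration rather than a complete fairness audit; the function names and the sample loan decisions are made up for the example.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Decision = Tuple[str, bool]  # (group label, was the application approved?)


def approval_rates(decisions: List[Decision]) -> Dict[str, float]:
    """Return the share of approved decisions for each group."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions: List[Decision]) -> float:
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up sample data: group A is approved 3 times out of 4, group B once out of 4.
SAMPLE = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

if __name__ == "__main__":
    print(approval_rates(SAMPLE))
    print(demographic_parity_gap(SAMPLE))
```

A gap near zero means the groups are approved at similar rates. A large gap does not prove unfairness on its own, but it flags a model for closer review.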

Data-Centric AI: Quality Over Quantity

The shift towards data-centric AI emphasizes the importance of quality data over mere volume. This approach not only ensures more reliable models but also aligns with the growing demands for responsible AI. Tools for data augmentation and automated cleansing will play pivotal roles in enhancing the integrity of data used in AI training, particularly in sensitive fields like autonomous driving and medical research.
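As a small illustration of automated cleansing, the sketch below trims whitespace, drops records with missing required fields, and removes case-insensitive duplicates. The record layout and the `clean_records` helper are assumptions made for this example; real pipelines typically add validation rules specific to their domain.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]


def clean_records(records: List[Record], required: List[str]) -> List[Record]:
    """Strip whitespace, drop incomplete rows, and remove duplicates."""
    seen = set()
    cleaned: List[Record] = []
    for record in records:
        # Normalize string values by trimming surrounding whitespace.
        normalized = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Skip rows where a required field is missing or empty.
        if any(not normalized.get(field) for field in required):
            continue
        # Compare rows case-insensitively to detect duplicates.
        fingerprint = tuple(
            sorted((key, str(value).lower()) for key, value in normalized.items())
        )
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(normalized)
    return cleaned


if __name__ == "__main__":
    raw = [
        {"id": "1", "label": " cat "},
        {"id": "1", "label": "cat"},
        {"id": "2", "label": None},
        {"id": "3", "label": "Dog"},
    ]
    print(clean_records(raw, required=["id", "label"]))
```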

Generative AI: Transforming Code and Software Development

Generative AI is poised to become a vital asset in software development. AI-driven refactoring tools that suggest optimizations in real time, along with natural-language-to-code conversion, will streamline development processes. These tools promise to lower the barrier to entry for software development and enable more professionals to turn their ideas into functional software without deep programming knowledge.

AI and ML for Sustainability and Climate Science

The application of AI in climate science and sustainability is an area I am particularly enthusiastic about. AI's potential to enhance predictive models and optimize resource management could be critical in addressing global environmental challenges. Real-time data analytics powered by AI will enable more informed decisions in energy management, pollution control, and resource allocation.

Real-Time Machine Learning (RTML) and Privacy-Preserving AI

The demand for instant insights has given rise to RTML, which I believe will become essential across various sectors, from fraud detection to personalized e-commerce experiences. Moreover, as privacy concerns escalate, technologies like federated learning will become fundamental in developing robust AI models without compromising data privacy.
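The core idea behind federated learning can be sketched in a few lines: each client trains locally and shares only its model weights, and a server combines them with an average weighted by how many samples each client holds. The example below is a simplified illustration of that averaging step only. The `federated_average` name and the toy weight vectors are assumptions, and real systems add secure aggregation, many training rounds, and much larger models.

```python
from typing import List, Sequence, Tuple

ClientUpdate = Tuple[List[float], int]  # (local model weights, local sample count)


def federated_average(updates: Sequence[ClientUpdate]) -> List[float]:
    """Combine client weights into one model, weighting by sample count.

    Only weights and counts reach the server; raw client data never does.
    """
    total_samples = sum(count for _, count in updates)
    if total_samples == 0:
        raise ValueError("at least one client must contribute samples")

    dimension = len(updates[0][0])
    averaged = [0.0] * dimension
    for weights, count in updates:
        if len(weights) != dimension:
            raise ValueError("all clients must share the same model shape")
        for index, weight in enumerate(weights):
            averaged[index] += weight * count / total_samples
    return averaged


if __name__ == "__main__":
    # Toy example: the second client holds three times as much data,
    # so its weights count three times as much in the average.
    clients = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
    print(federated_average(clients))
```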

Final Thoughts

The horizon for 2025 is vibrant and promising, with AI and data science set to offer more specialized, transparent, and high-quality solutions that prioritize ethical standards and privacy. For us in the field, staying abreast of these trends is not just beneficial—it is imperative to navigate the future landscape of technology effectively.

As we advance, the potential for AI to enhance and transform our professional and personal lives is boundless. Whether it's through building sophisticated LLMs, refining prompt engineering techniques, or driving innovations in data science, 2025 is gearing up to be an exhilarating year in AI and data science.

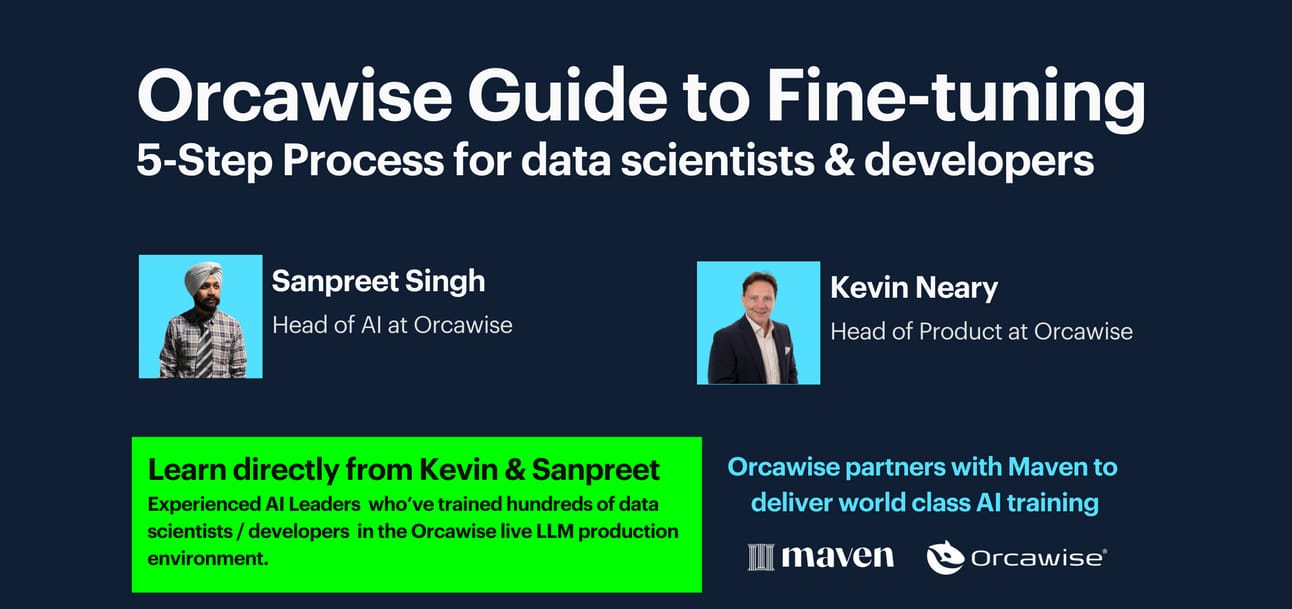

Stay in touch with AI skills demand in 2025: join our free Lightening Sessions, where we demonstrate our 5-step fine-tuning process.

Sample of LLM Jobs (Remote)

The demand for AI roles requiring NLP / ML / LLM skills continues to grow. Here is a sample of remote jobs available in Europe.

Director of AI Software Engineering (UK) - LexisNexis

Head of Artificial Intelligence (Germany) - erg group

The Value of Lightening Sessions for AI Training

To truly capitalize on the potential of LLMs, training and continuous learning are key. Our free lightening sessions are designed not just to educate but to transform your approach to AI, making you a leader in this next wave of tech evolution. Don’t just adapt to the future; shape it by mastering the art of LLM fine-tuning with Orcawise.

These sessions run every Thursday at 12:00 UTC / 07:00 EST for 30-40 minutes. In each one, we demonstrate how Orcawise fine-tunes LLMs in a live production environment.

We follow a proven 5-step fine-tuning process and each week we focus on one stage:

1. Data preparation

2. Model evaluation and selection

3. Fine-tuning the model

4. Model testing and optimization

5. Model scaling

Register once to access all the sessions and receive a free PDF copy of the Orcawise Guide to Fine-tuning.

Want to go deeper into LLMs? Join our flagship course, LLM Bootcamp Pro, on the Maven Learning platform. New cohorts start in December and January.

Thank you for reading my OrcaPulse JobAlerts newsletter, where we keep you up to date on AI job market trends, AI news, job alerts, and the free and paid training programs available.
